Durable Objects are powerful, but they shouldn’t be abused – and I think I was starting to abuse them. Forwarding virtually every request to a DO instead of handling it in a Worker may, I’m realizing, have been a mistake. So this blog post is more for me than it is for you this time, as I rationalize the direction we should take, but as always your opinions have a home here as well.

The problem started when…

From the inception of StarbaseDB, the focus has been on utilizing the power of Cloudflare’s Durable Objects, which provide a unique opportunity by keeping compute & data together on one machine. The sheer fact that SQL statements become synchronous and you can optionally place your own application logic near them is still a feat to marvel at.

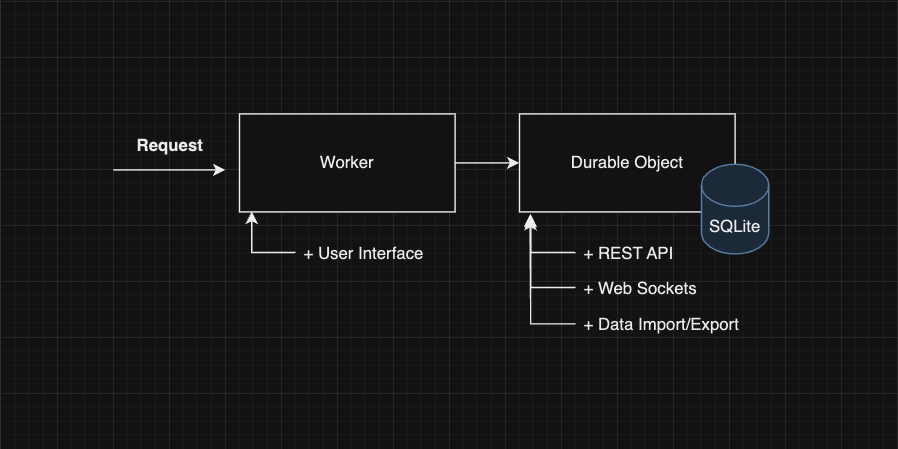

Now, as many projects unfurl and more features make their way onto the roadmap, the original idea oftentimes needs to alter its course. Today, nearly every request funnels initially through an extremely lightweight Cloudflare Worker and then likely gets routed to a Cloudflare Durable Object. Whether you want to query over HTTP, use the REST API or web sockets, or import & export data – the Durable Object will handle that. Given how we currently treat Durable Objects (serving as a managed database), this design pattern is acceptable.

Then it happened. A new feature wandered into my mind and I wanted it on the roadmap. There are other SQLite offerings out on the market, but what could really take StarbaseDB and give it a super unique value proposition unlike the others? Here’s my elevator pitch:

Your database is the star of the show and you likely already have one to begin with. For any number of reasons maybe you want to develop or iterate on a new feature but you don’t want to tinker with your existing production database yet – at least not until you’re ready! Or perhaps you’re not the technical member of your team but you still want to prototype on top of your database without having your backend/database teams sweating with worry as to what you’re going to do. What if… StarbaseDB could “attach” itself onto any database you want, acting as a worry-free, zero-hassle extension to your database?

Now of course you could still use StarbaseDB as a standalone SQLite database offering. In addition, though, you could also use it alongside your existing databases. That’s where the problem revealed itself. Currently all my requests are passed directly to the Durable Object to handle, but if we are now going to make requests to an optional “external” data source as well, should that logic live in the Durable Object?

Probably not.

So, what is a Durable Object?

A Durable Object is a single-threaded, stateful, compute and storage instance. It’s an incredible design by Cloudflare that allows you to execute compute on the same machine where your data currently exists. Without this, you would likely need an additional hop & skip: a traditional API layer where your application logic lives, making requests to your data on a separate machine.

So what was once:

[CLIENT] → [API] → [DATA]

With Durable Objects can become:

[CLIENT] → [DURABLE OBJECT]

Generally speaking the latency depends on the location of where your Durable Object exists in the internet realm, especially in our case where it’s generally a single instance. If you’re in Singapore but the DO is in the United States, it could be a high latency request.

They can scale horizontally by distributing state across multiple Durable Objects. The downside is that once state is spread across multiple objects, you need some resolution layer. For example, if you have two DOs that both receive identical requests to update the state (e.g. booking a seat on a plane for Row 10 Seat A), you have to determine which request actually wins and report it back to the correct user rather than telling both users “hey you got it”.
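To make the seat example concrete, here is a minimal TypeScript sketch. It is not Cloudflare’s API: `SeatMap` and its methods are hypothetical stand-ins for the state a single object would own. When one instance holds the state and handles requests one at a time, the first booking wins and the second is rejected. Split the state across two instances and each accepts its own first request, which is the conflict a resolution layer would have to untangle.

```typescript
// Hypothetical stand-in for the state owned by one Durable Object.
// SeatMap, book and holder are illustrative names, not a Cloudflare API.
class SeatMap {
  private bookings = new Map<string, string>();

  // Returns true if the seat was free and is now held by `user`.
  book(seat: string, user: string): boolean {
    if (this.bookings.has(seat)) {
      return false; // someone already holds this seat
    }
    this.bookings.set(seat, user);
    return true;
  }

  holder(seat: string): string | undefined {
    return this.bookings.get(seat);
  }
}

// One instance, requests handled one at a time: a clear winner.
const single = new SeatMap();
const aliceResult = single.book("10A", "alice");
const bobResult = single.book("10A", "bob");

// Two instances with split state: both accept, so something must reconcile.
const shardA = new SeatMap();
const shardB = new SeatMap();
const bothAccepted = shardA.book("10A", "alice") && shardB.book("10A", "bob");
```

In the single-instance case only the first caller gets the seat; in the split case `bothAccepted` comes out true, and that is precisely the situation you don’t want to report back to two users.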

Okay then, what is a Worker?

A Worker is a stateless compute instance that can handle high-concurrency processing. That means whether you have 1 or 100 requests incoming at once, Cloudflare can scale to handle as many requests as you want, with each request getting its own compute power. Because a Worker is stateless by design, requests do not need to know about each other. This is unlike how you might typically use a Durable Object, where many connections should know about each other to some capacity.

Workers can scale horizontally with ease (again, stateless). You also get the benefit of low latency globally thanks to edge distribution so your request reaches a compute instance far faster depending on where the request originated from.

What we are doing today

Today when a request is made to your StarbaseDB instance it first passes through a Worker. The worker mostly verifies that the user has the proper authentication to proceed and then passes that request forward to the durable object for it to handle the intent of the user.

Essentially any type of interaction you want to have with your data, the Durable Object is responsible for performing those duties. And you know what? It does an amazing job at that, too, given the fact it can interact currently with the only data source known to a StarbaseDB instance.
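Roughly, today’s flow looks like the sketch below. This is a simplification under stated assumptions, not the actual StarbaseDB source: the request shape, the bearer-token check, the `routeToday` name, and the example paths are all hypothetical. The point it shows is that the Worker only decides “authenticated or not”, and everything that passes is handed to the one Durable Object.

```typescript
// Illustrative types only; a real Worker receives a standard Request object.
type IncomingRequest = { path: string; authorization?: string };

type Routed =
  | { handledBy: "worker"; status: 401 }
  | { handledBy: "durable-object"; path: string };

// Assumption: a single shared bearer token guards the instance.
function routeToday(req: IncomingRequest, expectedToken: string): Routed {
  if (req.authorization !== `Bearer ${expectedToken}`) {
    // The Worker answers unauthenticated requests itself.
    return { handledBy: "worker", status: 401 };
  }
  // Every authenticated request, whatever its intent, goes to the one DO.
  return { handledBy: "durable-object", path: req.path };
}

// Hypothetical paths, used only to show that intent does not affect routing.
const rejected = routeToday({ path: "/query" }, "secret");
const queryRoute = routeToday(
  { path: "/query", authorization: "Bearer secret" },
  "secret"
);
const exportRoute = routeToday(
  { path: "/export/dump", authorization: "Bearer secret" },
  "secret"
);
```

Note that a query and an export end up in the same place. That is fine while the Durable Object’s SQLite database is the only data source there is.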

Unfortunately – the problems start to show their face when we want that same type of functionality that is performed on the Durable Object to work on other data sources outside of it.

So now we seek a solution.

How I think we should do it tomorrow

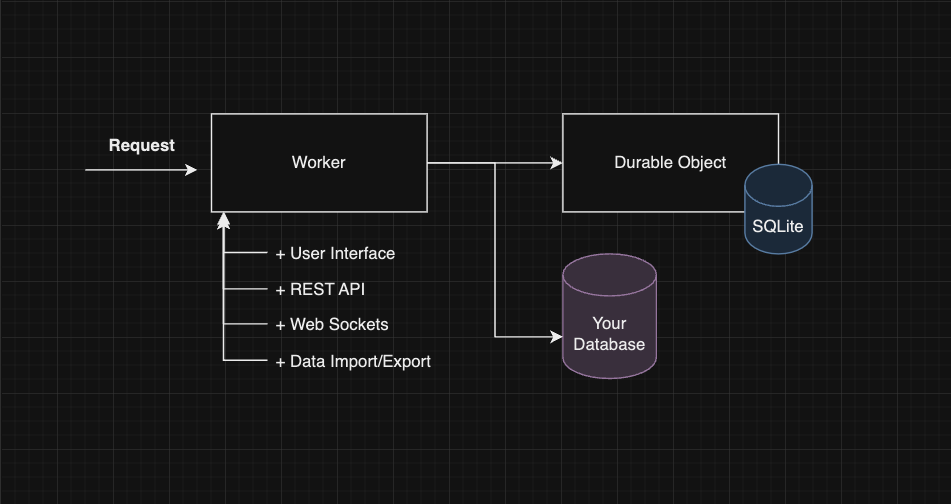

We want to supercharge developers. My current line of thinking is that having a “bolt-on” or attachable database alongside your existing development or production database can be immensely helpful in quickly iterating on ideas. Want to add analytics tracking without impacting the performance of your Postgres database? Send that traffic to your SQLite database and don’t touch your Postgres database at all. StarbaseDB can route your traffic to the appropriate data source, leaving your other databases at zero risk.

Not only do we want to route data between multiple data sources, but we want to be able to sit on top of existing data sources and provide the same out-of-the-box experiences with no additional development resources as we do today with your SQLite database. With the proposed setup in the diagram above, now either data source can have functionality to help you out without any additional development effort.

Tomorrow’s architecture brings a lot of the application layer logic forward to the Worker, such as the REST API, web sockets, and really anything that needs to interact with data. Since we can now potentially interact with multiple data sources, the logic cannot be tied directly to the Durable Object. If it were, all our requests would continue to be single-threaded, which wouldn’t scale well for an external data source.

That all being said, I think there is still a version of the operation.ts file that can stay in the Durable Object so we still benefit from synchronous database reads & writes when users choose to interact with the SQLite data source. Perhaps a more generalized version will exist at the Worker level to interact with other data sources (Postgres, MySQL, BigQuery, et al).
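Here is one way that split could be sketched in TypeScript. None of this is the real operation.ts: the `DataSource` shape, the per-table routing map, and the function names are assumptions made purely for illustration. It shows the two decisions the Worker would make: which data source a request targets, and whether the work stays in the Durable Object (synchronous SQLite) or runs on the Worker (external databases).

```typescript
// Hypothetical source descriptors; StarbaseDB's real configuration may differ.
type DataSource =
  | { kind: "internal" } // SQLite inside the Durable Object
  | { kind: "external"; dialect: "postgres" | "mysql" | "bigquery" };

type ExecutionPlan = {
  runsOn: "durable-object" | "worker";
  synchronousSql: boolean;
};

// Assumption: routing is configured per table. Analytics goes to SQLite,
// everything else goes to the existing Postgres database.
const tableRoutes = new Map<string, DataSource>([
  ["analytics_events", { kind: "internal" }],
]);
const defaultSource: DataSource = { kind: "external", dialect: "postgres" };

function sourceFor(table: string): DataSource {
  return tableRoutes.get(table) ?? defaultSource;
}

function planExecution(source: DataSource): ExecutionPlan {
  if (source.kind === "internal") {
    // Keep the DO-local path so SQL stays synchronous next to the data.
    return { runsOn: "durable-object", synchronousSql: true };
  }
  // External sources are reached from the stateless, concurrent Worker.
  return { runsOn: "worker", synchronousSql: false };
}

const analyticsPlan = planExecution(sourceFor("analytics_events"));
const usersPlan = planExecution(sourceFor("users"));
```

With this shape, the single-threaded Durable Object only sees the traffic that actually needs its SQLite database, and requests for external sources never queue behind it.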

Conclusion

We want to allow you to choose to use StarbaseDB either as a standalone SQLite database hosted on Cloudflare’s infrastructure or as a “bolt-on” extension to your existing databases, no matter where they exist in the world. To handle this wishlist (and likely additional considerations in the future) we need to move logical components to where they make the most sense, so we can handle more concurrency, faster speeds, and scalability.

So why are these changes important?

PROS

Can support multiple data sources which is great for quick “add-on” type solutions (e.g. user auth, analytics) or testing new features without impacting your production database

Existing data sources can now get features such as REST API out of the box regardless of their database provider

Horizontal scaling makes more sense with detached data sources (non-DO SQLite sources)

Higher concurrency where our single threaded durable object is not the bottleneck for all interactions

CONS

We start to migrate some of the application logic away from the Durable Object – but not all!

There may perhaps need to be an additional Worker installed for each StarbaseDB that handles this application layer logic as a WorkerEntrypoint

Need to move a substantial amount of code around to make it work

Join the Adventure

We’re working on building an open-source database offering with the building blocks we talked about above and more. Our goal is to help make database interactions easier, faster, and more accessible to every developer on the planet. Follow us, star us, and check out Outerbase below!

Twitter: https://twitter.com/BraydenWilmoth

GitHub Repo: github.com/Brayden/starbasedb

Outerbase: https://outerbase.com